13 December 2025 - Infrastructure

A year ago, we wrote about Patela v1, our diskless Tor relay orchestration system built for exit nodes running on System Transparency’s stboot. The core idea was simple: nodes boot from read-only images, generate their own keys, encrypt them locally, and back them up on the server. In this post, we present an updated architecture that attests the nodes’ TPMs and removes the need for backups.

In v1, node identity was based on mTLS certificate digests. We embedded client certificates at compile time using a script, and the SHA-256 hash of each certificate became the node’s database identity.

```rust
// V1: Identity = hash(certificate)
let node_id = sha256(client_cert);
```

The authentication flow was the following: the client presents a certificate, the server validates it against its CA, and then uses the certificate digest as a database key. However, this was cumbersome and not what we ultimately wanted; it was a shortcut to start early testing. The real goal was to keep the keys in the TPM itself: stored inside the chip, bound to the hardware, never backed up, and without requiring any disk.

V2 replaces this by making the TPM the source of truth:

```rust
// V2: Identity = (EK_public, AK_public, AK_name)
let node_identity = (ek_public, ak_public, ak_name);
```

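Here’s how it works. The EK (Endorsement Key) is derived from a seed unique to each TPM, the AK (Attestation Key) is a restricted signing key generated inside the TPM, and the AK name is a digest of the AK’s public area. The server stores this triplet in the database: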

```sql
CREATE UNIQUE INDEX idx_nodes_tpm_identity
ON nodes(ek_public, ak_public, ak_name);
```

Now, authentication to the configuration server requires physical possession of that specific TPM chip.

We don’t validate EK certificates against TPM manufacturer CAs yet: when a node first connects, the server has no cryptographic proof that the EK comes from real hardware rather than a software emulator. This is on our to-do list, but on its own it would not add much assurance for our use case.

Instead, we rely on TOFU (trust on first use): new nodes are created with `enabled=0`. An administrator should know when a new node is expected to register, and must run `patela node enable <node_id>` before the node can authenticate. This prevents arbitrary devices from joining the network on their own, while keeping the whole flow mostly automated.
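The registration rule can be modelled in a few lines of Rust. This is a minimal in-memory sketch, not Patela’s code: `TpmIdentity`, `AuthDecision` and `NodeRegistry` are hypothetical names, a `HashMap` stands in for the SQLite `nodes` table and its unique index, and storing the keys as raw bytes is an assumption.

```rust
use std::collections::HashMap;

/// The (EK_public, AK_public, AK_name) triplet. Raw bytes are an assumption;
/// the real server may encode these values differently.
#[derive(Clone, Debug, PartialEq, Eq, Hash)]
pub struct TpmIdentity {
    pub ek_public: Vec<u8>,
    pub ak_public: Vec<u8>,
    pub ak_name: Vec<u8>,
}

#[derive(Debug, PartialEq, Eq)]
pub enum AuthDecision {
    /// First contact: the node is recorded but disabled (enabled = 0).
    PendingApproval(u64),
    /// Known identity that an administrator has not enabled yet.
    Disabled(u64),
    /// Known identity that has been enabled.
    Allowed(u64),
}

/// Hypothetical in-memory stand-in for the `nodes` table.
#[derive(Default)]
pub struct NodeRegistry {
    /// Plays the role of the unique index on the identity triplet.
    by_identity: HashMap<TpmIdentity, u64>,
    /// node id -> enabled flag
    enabled: HashMap<u64, bool>,
    next_id: u64,
}

impl NodeRegistry {
    /// Trust on first use: an unknown identity is inserted as disabled;
    /// for a known one, its enabled flag decides the outcome.
    pub fn register_or_lookup(&mut self, identity: &TpmIdentity) -> AuthDecision {
        if let Some(&id) = self.by_identity.get(identity) {
            return if self.enabled[&id] {
                AuthDecision::Allowed(id)
            } else {
                AuthDecision::Disabled(id)
            };
        }
        self.next_id += 1;
        let id = self.next_id;
        self.by_identity.insert(identity.clone(), id);
        self.enabled.insert(id, false);
        AuthDecision::PendingApproval(id)
    }

    /// Counterpart of `patela node enable <node_id>`; returns false for unknown ids.
    pub fn enable(&mut self, id: u64) -> bool {
        match self.enabled.get_mut(&id) {
            Some(flag) => {
                *flag = true;
                true
            }
            None => false,
        }
    }
}
```

A device with a different TPM presents a different triplet, so it stays pending until an operator approves that identity. The lookup alone proves nothing about possession of the TPM; that is the job of the credential challenge.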


This approach lets diskless Tor relays keep a persistent identity. Tor relays earn reputation and trust in the network based on a few parameters, mostly their stability and how long they have been running, so keeping long-term keys is fundamental for our relays to be useful. The difference from v1 is that the relay keys now live only in TPM persistent storage, which removes the need for backups. Hardware failures should be rare enough for this kind of binding to make sense.

Because Tor is not yet fully integrated with the TPM, the key is currently stored as a byte string in the TPM’s non-volatile storage, which means it can still be exported. Since the TPM standard does not support Ed25519 operations, this is unlikely to change in the short term, though we acknowledge it is suboptimal.

In V1, every relay got the same hardcoded torrc template, string-formatted:

```rust
// V1: One template to rule them all
let torrc = format!(r#"
Nickname {name}
ORPort {ip}:{or_port}
DirPort {dir_port}
ContactInfo your@email.com
ExitPolicy reject *:*
...
"#, name = relay.name, ip = relay.ip_v4, ...);
```

This worked until it didn’t: per-relay customisation was difficult to manage. Some nodes needed custom ExitPolicy rules, others needed different bandwidth limits depending on their upstream, and, more rarely, some needed custom ports. Every configuration change meant code changes, recompilation, and redeployment.

Now V2 introduces a configuration cascade:

```text
┌────────────────────────┐  │ O
│     Default Config     │  │ v
└───────────┬────────────┘  │ e
            │               │ r
┌───────────▼────────────┐  │ r
│   Per-machine Config   │  │ i
└───────────┬────────────┘  │ d
            │               │ e
┌───────────▼────────────┐  │
│  Per-instance Config   │  ▼
└────────────────────────┘
```

This is directly translated into the database schema:

```sql
-- Global defaults for all relays
CREATE TABLE global_conf (
    id INTEGER PRIMARY KEY,
    tor_conf TEXT,  -- torrc format
    node_conf TEXT  -- JSON for network settings
);

-- Per-node overrides
ALTER TABLE nodes ADD COLUMN tor_conf TEXT;
ALTER TABLE nodes ADD COLUMN node_conf TEXT;

-- Per-relay overrides
ALTER TABLE relays ADD COLUMN tor_conf TEXT;
```

When a client boots, the server resolves the configuration hierarchy:

```rust
// Pseudo-code for config resolution
let config = global_conf
    .merge(node_conf)   // Node overrides global
    .merge(relay_conf); // Relay overrides everything
```
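One way to make the pseudo-code concrete is to model each layer as a map from option name to values, with `None` standing for a NULL `tor_conf` column. This is a sketch under assumptions, not Patela’s implementation: `TorConf` and `resolve` are hypothetical names, and an option set in a higher layer replaces every line of that option from lower layers instead of appending to them.

```rust
use std::collections::BTreeMap;

/// One torrc layer: option name -> values. A list is used because options
/// such as ExitPolicy may appear on several lines.
pub type TorConf = BTreeMap<String, Vec<String>>;

/// Resolve global -> node -> relay. `None` models a NULL `tor_conf` column,
/// i.e. a layer with no overrides. Option names are assumed to be normalised
/// to a single spelling before merging.
pub fn resolve(global: &TorConf, node: Option<&TorConf>, relay: Option<&TorConf>) -> TorConf {
    let mut merged = global.clone();
    // Later layers win: each option they define replaces the inherited lines.
    for layer in node.into_iter().chain(relay) {
        for (key, values) in layer {
            merged.insert(key.clone(), values.clone());
        }
    }
    merged
}
```

Replacing rather than appending keeps overrides predictable: a relay-level ExitPolicy fully supersedes the global one instead of being mixed with it.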

We wrote a torrc parser (server/src/tor_config.rs) to handle Tor’s configuration format. It validates options against the known Tor options and merges configs so that later values override earlier ones.
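The validation step could look roughly like the following minimal sketch. It is not the code in server/src/tor_config.rs: `KNOWN_OPTIONS` is a small hypothetical allow-list (Tor supports many more options), only full-line `#` comments are handled, and option names are matched case-insensitively, as Tor does, then rewritten to one canonical spelling.

```rust
/// Illustrative allow-list only; Tor supports many more options.
const KNOWN_OPTIONS: &[&str] = &[
    "Nickname",
    "ORPort",
    "DirPort",
    "ContactInfo",
    "ExitPolicy",
    "RelayBandwidthRate",
    "RelayBandwidthBurst",
];

#[derive(Debug, PartialEq)]
pub enum ParseError {
    UnknownOption { line: usize, key: String },
    MissingValue { line: usize, key: String },
}

/// Parse torrc text into (option, value) pairs, preserving file order so a
/// later merge step can let later values win. Blank lines and full-line `#`
/// comments are skipped.
pub fn parse_torrc(text: &str) -> Result<Vec<(String, String)>, ParseError> {
    let mut entries = Vec::new();
    for (idx, raw) in text.lines().enumerate() {
        let line = raw.trim();
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        // Split at the first whitespace character; the trimmed rest is the value.
        let (key, value) = match line.split_once(char::is_whitespace) {
            Some((k, v)) => (k, v.trim()),
            None => (line, ""),
        };
        // Case-insensitive lookup, returning the canonical spelling.
        let canonical = KNOWN_OPTIONS
            .iter()
            .find(|known| known.eq_ignore_ascii_case(key))
            .ok_or_else(|| ParseError::UnknownOption { line: idx + 1, key: key.to_string() })?;
        if value.is_empty() {
            return Err(ParseError::MissingValue { line: idx + 1, key: key.to_string() });
        }
        entries.push((canonical.to_string(), value.to_string()));
    }
    Ok(entries)
}
```

Rejecting unknown options at import time means a typo such as `RelayBandwidthRte` fails when the operator runs the import, not when a relay boots.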

Now the workflow looks like this:

```sh
# Set global defaults (one time)
patela torrc import misc/default.torrc default

# Override ContactInfo for basement nodes
echo "ContactInfo basement@example.com" >> basement.torrc
patela torrc import basement.torrc node --id 3

# Give one relay extra bandwidth
echo "RelayBandwidthRate 100 MB" >> high-bandwidth.torrc
patela torrc import high-bandwidth.torrc relay --id murazzano
```

Configuration changes are now just database updates: at startup, each node fetches the most recent configuration associated with it from the server.

The authentication protocol uses TPM2’s make_credential / activate_credential challenge-response:

1. The client sends `EK_public`, `AK_public`, and `AK_name` to the server.
2. The server wraps a session token: `encrypted_session_token = make_credential(EK_public, AK_name, session_token)`.
3. The client unwraps it with its TPM: `session_token = activate_credential(encrypted_session_token)`.

The session_token is a Biscuit bearer token. Only the TPM holding the private part of the matching EK, with the AK named in the challenge loaded, can recover it via activate_credential. If the client returns the decrypted token, the server has cryptographic proof that the client possesses that specific TPM.

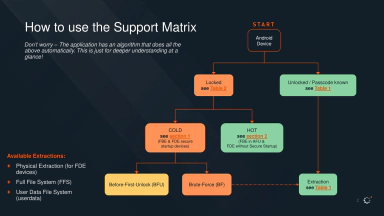
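The bookkeeping around this exchange can be sketched without any real TPM code. In this sketch the TPM2 operations are abstracted away: `make_credential` is passed in as a closure on the server side, and `activate_credential` sits behind a hypothetical `Tpm` trait on the client side, where a real client would go through a TPM stack. `Identity`, `Verifier` and `respond` are illustrative names, and keeping the expected token in a pending map is one possible way to check the response, not necessarily Patela’s.

```rust
use std::collections::HashMap;

/// Hypothetical client-side hook for TPM2_ActivateCredential.
pub trait Tpm {
    /// Returns the wrapped secret, or None if this TPM cannot unwrap the blob.
    fn activate_credential(&self, blob: &[u8]) -> Option<Vec<u8>>;
}

/// Identity triplet sent by the client (step 1).
pub struct Identity {
    pub ek_public: Vec<u8>,
    pub ak_public: Vec<u8>,
    pub ak_name: Vec<u8>,
}

/// Server side. `M` stands in for make_credential, which the server computes
/// in software from (EK_public, AK_name, secret).
pub struct Verifier<M: Fn(&[u8], &[u8], &[u8]) -> Vec<u8>> {
    make_credential: M,
    /// node id -> session token we expect back
    pending: HashMap<u64, Vec<u8>>,
}

impl<M: Fn(&[u8], &[u8], &[u8]) -> Vec<u8>> Verifier<M> {
    pub fn new(make_credential: M) -> Self {
        Verifier { make_credential, pending: HashMap::new() }
    }

    /// Step 2: wrap the session token for this node's EK and AK name.
    /// The token must come from a secure source (e.g. a freshly minted Biscuit).
    pub fn challenge(&mut self, node_id: u64, id: &Identity, session_token: Vec<u8>) -> Vec<u8> {
        let blob = (self.make_credential)(
            id.ek_public.as_slice(),
            id.ak_name.as_slice(),
            session_token.as_slice(),
        );
        self.pending.insert(node_id, session_token);
        blob
    }

    /// Step 4: accept only the exact token that was wrapped. The pending entry
    /// is removed on every attempt, so each challenge is single-use.
    pub fn verify(&mut self, node_id: u64, returned: &[u8]) -> bool {
        match self.pending.remove(&node_id) {
            Some(expected) => bytes_eq(&expected, returned),
            None => false,
        }
    }
}

/// Byte comparison without an early exit on the first mismatch.
fn bytes_eq(a: &[u8], b: &[u8]) -> bool {
    a.len() == b.len() && a.iter().zip(b).fold(0u8, |acc, (x, y)| acc | (x ^ y)) == 0
}

/// Step 3 (client): unwrap the challenge with the local TPM.
pub fn respond<T: Tpm>(tpm: &T, blob: &[u8]) -> Option<Vec<u8>> {
    tpm.activate_credential(blob)
}
```

Because each pending entry is consumed on the first attempt, a captured response cannot be replayed against a later challenge.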

We have two end goals, which will take more development time and understanding. The first is to seal the TPM secrets and storage via measured boot, completing the setup with System Transparency and coreboot. It is ambitious, but it is the end state we are aiming for.

This would make the system resistant to physical compromise: even if a server is physically seized, booting it with different firmware or a different bootloader would prevent the TPM from unsealing, so the keys could not be recovered in any alternate setup.
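The reason the TPM would refuse to unseal is the PCR extend operation that measured boot relies on. This is standard TPM 2.0 behaviour, not anything Patela-specific: each boot stage hashes the next component $m$ and folds that digest into a PCR, so the final value depends on every measurement and on their order.

$$
\mathrm{PCR}_i \leftarrow H\!\left(\mathrm{PCR}_i \,\|\, H(m)\right)
$$

A secret sealed under a PCR policy records a digest of the expected PCR values, and unsealing succeeds only if the current values reproduce it. Replacing the firmware or bootloader changes at least one $H(m)$, and therefore the resulting PCR value.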

There are also some simpler usability improvements on our to-do list, for example properly showing operators the diffs between global, node, and relay configs before applying them.

The code is on GitHub: osservatorionessuno/patela

To run a v2 development setup:

```sh
# Server setup
export DATABASE_URL="sqlite:$PWD/patela.db"
cargo sqlx database reset --source server/migrations -y

# Set required config
cargo run -p patela-server -- torrc import misc/default.torrc default
cargo run -p patela-server -- node set ipv4_gateway 10.10.10.1 default
cargo run -p patela-server -- node set ipv6_gateway fd00::1 default

# Run server
cargo run -p patela-server -- run

# Client setup (requires TPM2 or swtpm emulator)
export TPM2TOOLS_TCTI="swtpm:host=localhost,port=2321"
cargo run -p patela-client -- run --server https://localhost:8020

# Approve the node (from server terminal)
cargo run -p patela-server -- list node
cargo run -p patela-server -- node enable 1
```

The code is still small, at around 6,000 lines of Rust across client and server, kept readable with cargo fmt and clippy, and partially documented. It is still a work in progress: as anticipated, we will keep exploring until our setup is as robust as we want it to be.


Bugs, questions, patches: github.com/osservatorionessuno/patela/issues

You’ve read an article from the Infrastructure section, where we describe our material and digital commitment to an infrastructure built as a political act of reclaiming digital resources.

We are a non-profit organization run entirely by volunteers. If you value our work, you can support us with a donation: we accept financial contributions, as well as hardware and bandwidth to help sustain our activities. To learn how to support us, visit the donation page.