5 May 2025 - Infrastructure

The Osservatorio has finally activated its first experimental nodes on a diskless infrastructure, physically hosted in our own space and designed for low power consumption. In this post, we explain the reasons that led us to work on this project and the implementation we developed. We are also releasing the source code and documentation for the software we wrote, which we will continue to improve and which we hope will be useful for replicating our setup.

As we’ve often pointed out, running Tor nodes carries certain risks and some fairly common problems. When an investigation or other incident occurs, the authorities do not always understand how Tor works, and even when they do, they may still act indiscriminately, knowing full well that they are not targeting the actual party under investigation. There are many precedents: in Austria, in Germany, in the United States, in Russia, and likely in many other countries. Some of our members have personally experienced that this can also happen in Italy.

In order to protect ourselves, our infrastructure, and the anonymity and privacy of our users, we must therefore consider a range of possible scenarios, including:

In the first case, the main obstacle is the constant stream of abuse reports and complaints about the activity of our network. To keep operating, as we’ve mentioned in previous posts, we now manage our own network with IP addresses we own, routed directly to our physical location. This minimizes, as far as is technically possible (for now!), the number of intermediaries with access to or control over our infrastructure.

Since the beginning of the project, we’ve followed two main intuitions: the first is that by approaching classic problems in less conventional ways, we might find solid solutions and also pave the way for other organizations and projects (like buying a physical space!). The second is that, to keep interest alive, both among our members and externally, our activities must be fun, engaging, and innovative.

As already mentioned, we operate a router at a datacenter in Milan that announces our AS and IP space. It is currently connected to our basement via an XGS-PON 10G/2.5G link, but our goal is to expand our presence geographically and, where possible, reuse the same network resources (we’ll share more updates on this soon).

One of the key elements of this architecture is that we only trust machines to which we have exclusive access. This means that even our main router at the MIX in Milan is considered potentially untrustworthy. For this reason, our main focus is on our datacenter in Turin.

System Transparency is a project originally funded by Mullvad for their VPN infrastructure, and now actively developed and maintained by Glasklar Teknik.

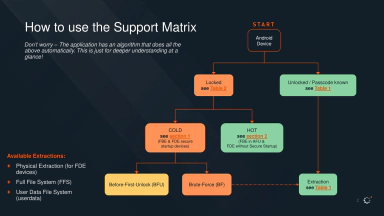

The goal of the project is to develop a set of tools to run and certify transparent systems. The idea is as follows: first, system images must be reproducible—that is, ISO files or similar images should be bit-for-bit rebuildable by anyone from source code, in order to prove the absence of tampering (or backdoors). Second, everything needed to reproduce these images must be public and well-documented, as should the images themselves. Finally, at least two additional properties must be ensured: first, only authorized system images should be allowed to run; second, there must be a public, immutable list of all authorized images (a so-called transparency log).

To summarize, in sequence, the concept of system transparency requires:

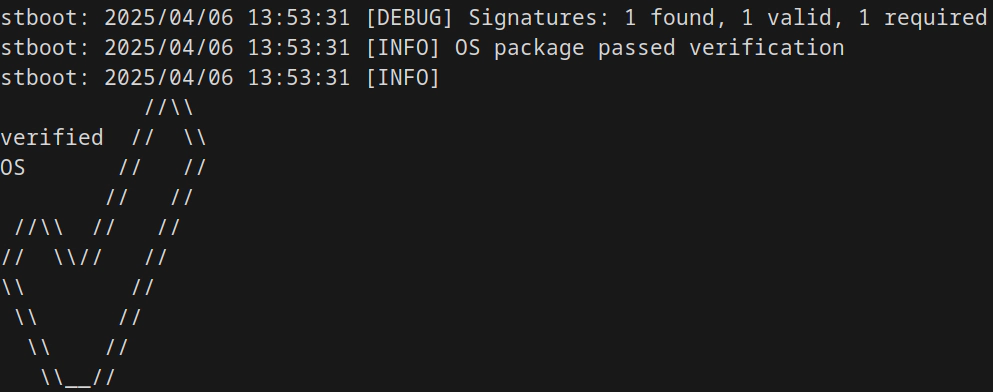

Among the various tools provided by the System Transparency project, stboot does most of the heavy lifting. The server boots from a minimal local image which, thanks to stboot, downloads and verifies the next stage: a reproducible and signed system image intended to run Tor (or, in the future, other services). Because of the signature verification, we can safely host these images remotely or in the cloud without major security concerns.

Since the diskless system is still experimental—and although our goal is to make the entire infrastructure easily replaceable—we still run a few services and devices in a more traditional setup:

The problem with diskless software is that it doesn’t remember anything—not even the few configurations or keys needed to function correctly. In our case, there are just a few essential configurations for a Tor relay:

/etc/torrc/lib/tor/keys/*

These configurations also need to be generated on the machine and updated later. For example, we need to be able to update the list of nodes that make up our family. Clearly, maintaining a separate OS image for each machine would be both impractical and hard to maintain.

While tools like ansible-relayor exist, we chose a different approach based on practical and security considerations. We prefer each node to generate its own keys and manage its own configuration, and for the configuration to be applied in pull mode rather than push. In other words, the node itself periodically checks for configuration updates, and only the node should have control over its keys. This is an important security distinction: a compromised configuration server should not be able to affect the security of the node or its keys.
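As a rough illustration of pull mode, here is a minimal sketch in which the node asks for its configuration and decides by itself whether to apply it. Everything in it (`NodeConfig`, `ConfigSource`, `InMemoryServer`, `poll_once`, the version counter) is hypothetical and chosen for the example; it is not patela's actual API or wire format.

```rust
use std::collections::HashMap;

/// A versioned configuration as a node might receive it (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
struct NodeConfig {
    version: u64,
    family: Vec<String>, // fingerprints of the relays in our family
}

/// Anything the node can ask for its configuration (hypothetical trait).
trait ConfigSource {
    fn fetch(&self, node_id: &str) -> Option<NodeConfig>;
}

/// In-memory stand-in for a configuration server.
struct InMemoryServer {
    configs: HashMap<String, NodeConfig>,
}

impl ConfigSource for InMemoryServer {
    fn fetch(&self, node_id: &str) -> Option<NodeConfig> {
        self.configs.get(node_id).cloned()
    }
}

/// One polling step, run by the node. The server never pushes anything:
/// the node fetches, compares versions, and applies only newer configurations.
/// Returns true when a configuration was applied.
fn poll_once(source: &dyn ConfigSource, node_id: &str, current: &mut Option<NodeConfig>) -> bool {
    match source.fetch(node_id) {
        Some(new) if current.as_ref().map_or(true, |c| new.version > c.version) => {
            *current = Some(new);
            true
        }
        _ => false,
    }
}

fn main() {
    let mut server = InMemoryServer { configs: HashMap::new() };
    server.configs.insert(
        "node-a".to_string(),
        NodeConfig { version: 1, family: vec!["AAAA".to_string()] },
    );

    let mut current = None;
    // The first poll applies version 1, the second finds nothing newer.
    println!("applied: {}", poll_once(&server, "node-a", &mut current));
    println!("applied: {}", poll_once(&server, "node-a", &mut current));
}
```

Note that nothing in this flow ever hands key material to the server; the server only answers requests.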

And that’s how patela was born.

Patela is a minimal software tool that downloads and uploads configuration files to a server. The server communicates network configurations (primarily assigning available IPs and the gateway via an API), and the client reads and applies them. All other files that would normally need to persist between reboots are encrypted locally using the TPM and then uploaded in encrypted form to the configuration server. This way, the configuration server never has access to the machine’s keys and cannot directly compromise it—except possibly through Denial of Service, such as distributing invalid IPs or corrupted backups.
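To make the server's role more concrete, the following sketch shows one way a configuration server could hand out addresses from a pool and keep a stable lease per node. The types `NetConfig` and `Allocator`, the method `assign`, and the documentation-range addresses are assumptions made for this example, not patela's real data model.

```rust
use std::collections::{BTreeMap, VecDeque};
use std::net::Ipv4Addr;

/// Network settings handed to a node (hypothetical shape).
#[derive(Debug, Clone, Copy, PartialEq)]
struct NetConfig {
    address: Ipv4Addr,
    gateway: Ipv4Addr,
}

/// Server-side state: the free addresses and the leases already granted.
struct Allocator {
    gateway: Ipv4Addr,
    free: VecDeque<Ipv4Addr>,
    leases: BTreeMap<String, Ipv4Addr>, // keyed by the node's identity
}

impl Allocator {
    fn new(gateway: Ipv4Addr, pool: Vec<Ipv4Addr>) -> Self {
        Allocator { gateway, free: pool.into(), leases: BTreeMap::new() }
    }

    /// A known node always gets its previous address back; a new node takes
    /// the next free one. Returns None when the pool is exhausted.
    fn assign(&mut self, node_id: &str) -> Option<NetConfig> {
        if let Some(address) = self.leases.get(node_id).copied() {
            return Some(NetConfig { address, gateway: self.gateway });
        }
        let address = self.free.pop_front()?;
        self.leases.insert(node_id.to_string(), address);
        Some(NetConfig { address, gateway: self.gateway })
    }
}

fn main() {
    // 192.0.2.0/24 is reserved for documentation, so these are placeholders.
    let mut allocator = Allocator::new(
        Ipv4Addr::new(192, 0, 2, 1),
        vec![Ipv4Addr::new(192, 0, 2, 10), Ipv4Addr::new(192, 0, 2, 11)],
    );
    println!("{:?}", allocator.assign("node-a"));
    println!("{:?}", allocator.assign("node-a")); // same lease as before
    println!("{:?}", allocator.assign("node-b"));
}
```

In this model the server only ever stores addresses and opaque encrypted blobs, which matches the threat model described above: the worst it can do is hand out wrong data.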

The system offers the following advantages:

Since Tor is being rewritten in Rust, and will soon offer everything needed to replace the classic C-Tor implementation even for relays, we decided to align with this and adopt the same language for better future compatibility.

During development, it became clear that a flexible toolchain was essential, especially given the need to use C libraries and support multiple platforms. While patela’s architecture isn’t conceptually very complex, making a piece of software like this maintainable over time, with so many components, is no easy task. Fortunately, the Rust ecosystem and its tooling have matured significantly in recent years, now offering almost everything we need. In practice, we aim to build both a client and a server, and we need:

arm64, x86_64)

Containers are often used to meet requirements like ecosystem consistency. However, our goal is to build a simple, lightweight architecture that works on low-power machines and can modify global network configurations.

There are also several things we explicitly want to avoid, some due to personal preference, others based on our chosen constraints:

Makefile to cover all these goals would likely be longer and more complex than writing the project itself

And here are the projects that helped us achieve all of this with just a few lines of code and relatively little effort:

Once the patela binary is compiled—with all required assets included—it’s enough to copy it into the system image before signing and distributing it.

As mentioned earlier, we use constgen, which makes it easy to generate compile-time constants, for example, embedding the client’s SSL certificates and the Tor configuration template.

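```rust
// Embed the certificates into the binary as compile-time constants.
// Assumes `std::{env, fs}` and the `const_declaration!` macro are in scope.

// Path to the server CA certificate, with a default for local builds.
let server_ca_file =
    env::var("PATELA_CA_CERT").unwrap_or_else(|_| String::from("../certs/ca-cert.pem"));
// Path to the client key and certificate; this panics if the variable is not set.
let client_key_cert_file = env::var("PATELA_CLIENT_CERT").unwrap();

let server_ca = fs::read(server_ca_file).unwrap();
let client = fs::read(client_key_cert_file).unwrap();

// One `pub` constant declaration per embedded file, joined into a single string.
let const_declarations = [
    const_declaration!(pub SERVER_CA = server_ca),
    const_declaration!(pub CLIENT_KEY_CERT = client),
]
.join("\n");
```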

Although cargo supports cross-compilation, when using external C dependencies like tpm2-tss, we must ensure that the libc used during compilation is compatible with the one present in our system images. As mentioned earlier, the Zig compiler—integrated directly with cargo via cargo-zigbuild—allows us to specify the target libc version, along with the architecture and kernel:

```shell
$ cargo zigbuild --target x86_64-unknown-linux-gnu.2.36
```
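The target string in that command is a regular Rust target triple followed by a dot and the glibc version. The small sketch below builds it for the two architectures we care about; the helper name `zig_target` is made up for this example, and `aarch64` is the name Rust uses for arm64.

```rust
/// Build the `--target` value for cargo-zigbuild: a Rust target triple
/// followed by `.` and the glibc version to link against.
fn zig_target(arch: &str, glibc: (u32, u32)) -> String {
    format!("{arch}-unknown-linux-gnu.{}.{}", glibc.0, glibc.1)
}

fn main() {
    // Print one build command per architecture, all pinned to glibc 2.36.
    for arch in ["x86_64", "aarch64"] {
        println!("cargo zigbuild --target {}", zig_target(arch, (2, 36)));
    }
}
```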

We’ve previously discussed how to run multiple Tor servers on the same network interface with different IPs using a high-level firewall like Shorewall.

Now we’ve applied the same rules using nftables, the native Linux interface for writing network rules.

Two rules are required: one to match packets by user ID, and another to configure source NAT.

```shell
$ nft 'add rule ip filter OUTPUT skuid <user id> counter meta mark set <mark id>'
$ nft 'add rule ip filter <interface> mark and 0xff == <mark id> counter snat to <source ip>'
```

The rules are therefore applied directly by patela, using the nftl library.
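To show how the two rules pair up for each Tor instance, here is a sketch that only fills in the placeholders of the commands quoted above and returns them as strings. It does not talk to the kernel and is not how patela applies the rules; the `Relay` struct, the `nft_rules` function, and the example values are assumptions for illustration.

```rust
use std::net::Ipv4Addr;

/// One Tor instance: the Unix user it runs as, the mark put on its packets,
/// and the public address its traffic should leave from (hypothetical shape).
struct Relay {
    uid: u32,
    mark: u32,
    source_ip: Ipv4Addr,
}

/// Fill in the placeholders of the two rules for one relay. The `interface`
/// value is passed through unchanged into the `<interface>` placeholder.
fn nft_rules(relay: &Relay, interface: &str) -> [String; 2] {
    [
        // Rule 1: mark every packet sent by the relay's user.
        format!(
            "add rule ip filter OUTPUT skuid {} counter meta mark set {}",
            relay.uid, relay.mark
        ),
        // Rule 2: rewrite the source address of packets carrying that mark.
        format!(
            "add rule ip filter {} mark and 0xff == {} counter snat to {}",
            interface, relay.mark, relay.source_ip
        ),
    ]
}

fn main() {
    // Two relays on one machine, each with its own user, mark, and address.
    let relays = [
        Relay { uid: 1001, mark: 11, source_ip: Ipv4Addr::new(192, 0, 2, 10) },
        Relay { uid: 1002, mark: 12, source_ip: Ipv4Addr::new(192, 0, 2, 11) },
    ];
    for relay in &relays {
        for rule in nft_rules(relay, "eth0") {
            println!("nft '{rule}'");
        }
    }
}
```

Because each relay runs under its own user, the mark set by the first rule is what lets the second rule pick the right source address.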

We use Mutual TLS (mTLS) for communication. Unlike traditional TLS, where only the client verifies the server, mTLS involves mutual authentication. This offers a double benefit and elegantly solves multiple problems: on the one hand, TLS natively provides transport security and certificate revocation mechanisms; on the other, client certificate authentication allows us to uniquely identify each node or machine. We can then save and track metadata such as the assigned IP, name, and other related info in the server’s database.

The main drawback of mTLS is managing certificates and their renewal. Everyone gets one bold choice per project — otherwise, where’s the fun? Ours was Biscuit, a token-based authentication system similar to JWT. The only reason we use a session token is to avoid authenticating every single API endpoint.

On the client side, the magic lies in the TPM. Libraries and examples are often sparse, and working with TPMs can be frustrating.

In our case, we need a key to survive across reboots, because it’s the only persistent element on the server.

We use a Trust On First Use (TOFU) approach: on first boot, the client generates a primary key inside the TPM and uses it to encrypt a secondary AES-GCM key, which is used to encrypt configuration backups. The AES-GCM key is then stored on the server, encrypted with the TPM. This means only the physical node can decrypt its backup. To revoke a compromised node, we simply delete its encrypted key from the server. The logic for detecting whether a TPM has been initialized runs entirely on the client and is implemented in patela.
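The client-side decision can be pictured as a small state table. The sketch below is a simplified model under our own assumptions: the `BootAction` enum, the `decide` function, and in particular the handling of the two inconsistent cases (`Revoked` and `Mismatch`) are illustrative and may differ from what patela actually does. No real TPM or cryptographic calls are made.

```rust
/// What the client should do at boot (hypothetical names).
#[derive(Debug, PartialEq)]
enum BootAction {
    /// First boot: create the TPM primary key, generate the AES-GCM key,
    /// and upload it to the server wrapped by the TPM.
    Enroll,
    /// Normal boot: unwrap the AES-GCM key with the TPM, then decrypt the backup.
    Restore,
    /// The TPM holds a key but the server no longer has the wrapped key:
    /// assumed here to mean the node was revoked.
    Revoked,
    /// The server has a wrapped key but this TPM was never initialized:
    /// the backup cannot be decrypted on this machine.
    Mismatch,
}

/// Decide from two observations: whether the local TPM already holds our
/// primary key, and whether the server returned a wrapped key for this node.
fn decide(tpm_initialized: bool, server_has_wrapped_key: bool) -> BootAction {
    match (tpm_initialized, server_has_wrapped_key) {
        (false, false) => BootAction::Enroll,
        (true, true) => BootAction::Restore,
        (true, false) => BootAction::Revoked,
        (false, true) => BootAction::Mismatch,
    }
}

fn main() {
    println!("{:?}", decide(false, false)); // a brand new node
    println!("{:?}", decide(true, true)); // an ordinary reboot
    println!("{:?}", decide(true, false)); // wrapped key deleted on the server
}
```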

In the future, directly integrating relay long-term keys with arti could be a better and more efficient solution, especially if combined with a robust measured boot process to control unsealing.

This post summarizes what we consider just the first phase of our project. We know there’s still a lot to do and improve, and we’d like to share our current wishlist:

We’ve launched four exit nodes, running with patela and System Transparency:

All four are running on a single Protectli with coreboot, pushing over 1 Gbps of effective total bandwidth.
